What is a vector database? What you need to know [2025]

Discover what you need to know about vector databases. See what they are, how they work, their benefits, examples, use cases, and more.

![What is a vector database? What you need to know [2025]](/_next/image?url=https%3A%2F%2Funable-actionable-car.media.strapiapp.com%2FWhat_is_a_vector_database_What_you_need_to_know_4cd16288b8.png&w=3840&q=75)

Vector databases store data as high-dimensional embeddings representing semantic relationships between data points. Unlike relational databases that rely on strict schema-based queries, vector databases rely on embeddings derived from models like Word2Vec, BERT, or CLIP to perform similarity-based searches.

Instead of looking for exact keyword matches, they measure vector distances in multi-dimensional space to retrieve semantically related information. The closer the match, the better the result.

Vector databases are distinguished by their focus on speed, scale, and flexibility. They retrieve results in milliseconds.

So, what is the trade-off? Vector databases boost accuracy and uncover hidden patterns through AI insights, but they demand strong infrastructure. And since they require fine-tuning to stay fast and responsive, the importance of the right setup cannot be overstated.

Traditional databases want structure and precision. Vector databases thrive in chaos. One tracks exact matches while the other finds meaning. Put simply, there wouldn’t be AI-powered search and personalization without them.

Looking for an example? Think about how streaming platforms always seem to predict your next binge. Or how e-commerce sites surface exactly what you want as soon as the weather changes. This is just a minuscule sample of how vector databases influence your life.

Famous names come to mind: Meilisearch, Pinecone, Qdrant, Milvus, and Chroma lead the way. Each pushes performance to the limit while making AI search faster and your life easier.

So, now that you know what vector databases are and what they do, let’s take a deeper look into their workings, pros and cons, and how they affect your daily life.

Ready? Let’s start.

What is a vector database?

The best way to describe traditional databases is to compare them to a filing system. Basically, they store data in rows and columns. While this structure makes sense for numbers and categories, it loses efficacy when dealing with meaning.

Vector databases work and store data differently. They rely on embeddings generated from transformer-based models, neural networks, or principal component analysis (PCA)-optimized reductions. These embeddings encode the semantic and syntactic meaning of data points.

When a query is submitted, the input is transformed into a query vector, which is then compared against stored embeddings using approximate nearest neighbor (ANN) search methods such as hierarchical navigable small world (HNSW) graphs or inverted file (IVF) indexing to locate the closest matches.
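To make that query flow concrete, here is a minimal sketch of the exact (brute-force) search that ANN methods such as HNSW and IVF approximate. The three-dimensional vectors and the `nearest` helper are illustrative assumptions, not output from a real embedding model.

```python
import math

def nearest(query_vector, stored, k=2):
    """Exact search: measure the distance to every stored vector, keep the k closest."""
    ranked = sorted(stored, key=lambda doc_id: math.dist(query_vector, stored[doc_id]))
    return ranked[:k]

# Hypothetical embeddings; real ones have hundreds of dimensions.
stored = {
    "trail running shoes": [0.9, 0.8, 0.1],
    "cushioned sneakers": [0.8, 0.9, 0.2],
    "cast-iron skillet": [0.1, 0.0, 0.9],
}

query_vector = [0.85, 0.85, 0.1]  # stands in for an embedded "comfy running shoes"
top_matches = nearest(query_vector, stored, k=2)
```

Scoring every stored vector gets slower as the collection grows, which is why production systems lean on approximate indexes instead.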

Every word, image, or sound carries weight. And a vector database gets what it means. It breaks it down into vector data (numbers) and maps out relationships instead of just stacking up facts. Why? So similar ideas stick together, and unrelated ones drift apart.

Type in “comfy running shoes,” and you’re not just getting a bunch of listings with those exact words. You’ll see sneakers built for all-day wear, the right arch support, and that perfect bounce, even if “comfy” isn’t in the product name. That’s vector search doing its thing. It knows what you’re looking for, not just what you typed.

E-commerce sites, generative AI assistants, and search engines run on this tech. They think through results and give you answers that make sense.

Now that we’ve nailed down why vector search changes the game with its search capabilities, let’s break down how it works. From vector representation to similarity search, every piece plays a role.

How does a vector database work?

Raw data is messy. A vector database cleans it up and makes sense of it. Before a query can be processed, raw data undergoes several preprocessing steps, including tokenization (for text), Fourier transforms (for audio), and feature extraction (for images).

The data is then mapped into a high-dimensional vector space using a pre-trained or fine-tuned model to produce an embedding. Some pipelines also apply dimensionality reduction techniques like PCA or autoencoders to reduce computational complexity while maintaining vector fidelity (t-SNE is better suited to visualizing embeddings than to indexing them).

When searching for something, the database generates a query vector representing your input. This vector is then compared against stored embeddings to find the most relevant results.
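The whole flow (preprocess, embed, compare) can be sketched in a few lines. The `toy_embed` function below is a deliberately crude stand-in for a trained model, an assumption made so the example runs without any downloads: it hashes word tokens into buckets, so it captures shared words rather than meaning.

```python
import math
import re
import zlib

DIMENSIONS = 16  # real embedding models use hundreds or thousands

def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())

def toy_embed(text):
    """Hash each token into a bucket, then scale the counts to unit length."""
    vector = [0.0] * DIMENSIONS
    for token in tokenize(text):
        vector[zlib.crc32(token.encode("utf-8")) % DIMENSIONS] += 1.0
    norm = math.sqrt(sum(v * v for v in vector))
    return [v / norm for v in vector] if norm else vector

def similarity(a, b):
    """Dot product; equals cosine similarity for unit-length vectors."""
    return sum(x * y for x, y in zip(a, b))

documents = ["red running shoes", "blue running shoes", "stainless steel kettle"]
index = {doc: toy_embed(doc) for doc in documents}  # stored embeddings

query_vector = toy_embed("running shoes")  # the query goes through the same steps
ranked = sorted(documents, key=lambda doc: similarity(query_vector, index[doc]), reverse=True)
```

The key design point is that documents and queries pass through the same embedding function, so their vectors are directly comparable.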

Vector representation

Everything in a vector database starts as a number. Text, photos, and audio get converted into high-dimensional points. Each vector holds context and understands relationships between words, objects, and ideas. Learn more about how vector embeddings work.

Let’s say you search for "apple." Are you buying fruit? Upgrading your iPhone? Shopping for a candle that smells like fresh-picked Granny Smiths? A vector database figures it out instantly. It looks at context and connects ideas to get exactly what you need instead of random results.

Indexing mechanisms

Billions of vectors, but only a handful of answers matter. Instead of scanning every record one by one, a vector database uses techniques like HNSW and IVF to quickly locate vectors that closely match the query vector.

Fancy names, simple concepts. Hierarchical Navigable Small World (HNSW) graphs use layered graphs with greedy search heuristics to traverse vector space efficiently, whereas an Inverted File (IVF) index clusters similar vectors into partitioned regions so that a query only has to scan the nearest few.
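Here is a rough sketch of the IVF idea only (HNSW needs more machinery than fits in a short example). The function names, the tiny 2D dataset, and the `nprobe` parameter name are assumptions chosen for illustration; real libraries train centroids on far more data.

```python
import math

def closest(point, candidates):
    """Index of the candidate nearest to point (Euclidean distance)."""
    return min(range(len(candidates)), key=lambda i: math.dist(point, candidates[i]))

def train_centroids(vectors, k, rounds=5):
    """A few rounds of k-means, seeded with k evenly spaced vectors."""
    step = len(vectors) // k
    centroids = [list(vectors[i * step]) for i in range(k)]
    for _ in range(rounds):
        groups = [[] for _ in range(k)]
        for vec in vectors:
            groups[closest(vec, centroids)].append(vec)
        for i, group in enumerate(groups):
            if group:  # keep the old centroid if a cluster went empty
                centroids[i] = [sum(dim) / len(group) for dim in zip(*group)]
    return centroids

def build_ivf(vectors, centroids):
    """Inverted lists: centroid index -> ids of the vectors assigned to it."""
    lists = {i: [] for i in range(len(centroids))}
    for vec_id, vec in enumerate(vectors):
        lists[closest(vec, centroids)].append(vec_id)
    return lists

def ivf_search(query, vectors, centroids, lists, k=1, nprobe=1):
    """Scan only the nprobe clusters whose centroids are nearest the query."""
    order = sorted(range(len(centroids)), key=lambda i: math.dist(query, centroids[i]))
    candidates = [vec_id for i in order[:nprobe] for vec_id in lists[i]]
    candidates.sort(key=lambda vec_id: math.dist(query, vectors[vec_id]))
    return candidates[:k]

vectors = [(0.1, 0.2), (0.2, 0.1), (0.15, 0.15), (0.9, 0.8), (0.8, 0.9), (0.85, 0.85)]
centroids = train_centroids(vectors, k=2)
lists = build_ivf(vectors, centroids)
result = ivf_search((0.88, 0.82), vectors, centroids, lists, k=2, nprobe=1)
```

With `nprobe=1` the search touches only half of this toy dataset. Raising `nprobe` scans more clusters, trading speed for a better chance of finding the true nearest neighbors.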

Similarity measures

Vector databases work by comparing data. The closer two vectors are, the more alike they seem.

Vector databases compute similarity based on various distance metrics, including:

- Cosine Similarity (CS): Measures angular similarity between two vectors and is widely used in text embeddings.

- Euclidean Distance (ED): Measures the straight-line distance between two points in high-dimensional space and is commonly applied in image recognition.

- Dot Product Similarity (DPS): Multiplies matching components and sums them, so it reflects both direction and magnitude. It is preferred in deep learning models, particularly for ranking relevance scores in transformer-based embeddings.
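The three metrics above are short enough to write out by hand. This is a plain-Python sketch for intuition; the example vectors are made up, and production systems use optimized native implementations.

```python
import math

def dot_product(a, b):
    """Sum of component-wise products; grows with both alignment and length."""
    return sum(x * y for x, y in zip(a, b))

def cosine_similarity(a, b):
    """Dot product divided by both lengths, so only the angle matters."""
    return dot_product(a, b) / (math.sqrt(dot_product(a, a)) * math.sqrt(dot_product(b, b)))

def euclidean_distance(a, b):
    """Straight-line distance between the two points."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

a = [1.0, 2.0, 2.0]
b = [2.0, 4.0, 4.0]  # same direction as a, twice the length

cos = cosine_similarity(a, b)
dist = euclidean_distance(a, b)
dot = dot_product(a, b)
```

Here cosine similarity treats `a` and `b` as identical because they point the same way, while Euclidean distance and dot product both register the difference in length. When all vectors are normalized to unit length, the three metrics produce the same ranking.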

This is how AI recommends songs, movies, TV shows, and products. It ranks results based on meaning, not just matching letters. That’s why vector databases power the smartest search engines today.

Next, let’s explore the features that make vector databases so powerful.

What are the key features of a vector database?

Traditional databases hoard data. They store words, numbers, and files, but they do not understand them. Vector databases, on the other hand, actually understand what is inside.

Let’s take a look at some of the features that make them what they are:

Efficient similarity search

Basic search engines are blind. They match words, not meaning. You search for “gaming laptop,” and suddenly, every laptop with “gaming” in the name pops up. Yeah, even the weak ones that can barely run Minecraft.

However, vector search understands what makes a laptop suitable for gaming. It looks at specs like GPU, refresh rate, cooling system, and what other gamers prefer. That’s why AI-powered search and recommendations make sense instead of just throwing random laptops at you.

High-dimensional data handling

Some data is simple. Some data is a beast. A vector database does not flinch either way.

A streaming service does more for you than just track movie titles. It factors in genre, pacing, cinematography style, and even mood. That is why it suggests something you didn’t even know you wanted to watch. A traditional database would list movies that share a few keywords in the description.

Scalability

Data keeps growing, and search loads keep getting heavier. When this happens, a traditional database starts lagging. A vector database, by contrast, is built to scale out.

Vector databases achieve scalability through sharding, distributed search partitions, and GPU acceleration. Libraries like FAISS (Facebook AI Similarity Search) utilize GPU-based parallel processing to efficiently handle high-throughput queries.

Unlike traditional databases that rely on B-tree or hash-based indexing, vector databases use quantization (PQ, OPQ) and ANN pruning to reduce the computational load while preserving recall rates.
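Product quantization is too involved for a short snippet, so the sketch below swaps in plain scalar quantization, a simpler relative of PQ, to show the core trade: fewer bytes per vector in exchange for a small, bounded error. The value range of -1 to 1 and the function names are assumptions.

```python
def quantize(vector, lo=-1.0, hi=1.0, levels=255):
    """Map each float in [lo, hi] to a one-byte integer code (0..levels)."""
    scale = levels / (hi - lo)
    return bytes(round((min(max(v, lo), hi) - lo) * scale) for v in vector)

def dequantize(codes, lo=-1.0, hi=1.0, levels=255):
    """Approximate reconstruction of the original floats."""
    step = (hi - lo) / levels
    return [lo + code * step for code in codes]

original = [0.12, -0.57, 0.99, -1.0]
codes = quantize(original)      # 1 byte per dimension instead of 4 for float32
restored = dequantize(codes)
max_error = max(abs(o - r) for o, r in zip(original, restored))
```

Each value is stored in a quarter of the space a 32-bit float would need, and the reconstruction error stays within half a quantization step.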

Integration capabilities

A vector database pretty much supercharges AI models.

It slots into search engines, fraud detection models, and chatbots without friction. It helps AI think faster, predict better, and deliver results that make sense.

For text-based applications, it integrates with Transformer models like BERT, GPT, or Sentence-BERT, while vision-based retrieval systems utilize CLIP or DINO embeddings.

Now, let’s talk about how businesses use vector databases to stay ahead of the curve.

What are the advantages of using a vector database?

Speed. Accuracy. Relevance. A vector database delivers all three without breaking a sweat.

AI search, personalized recommendations, massive-scale data management — none runs smoothly without vector search in the mix.

Now, let’s talk about why businesses are betting on vector databases.

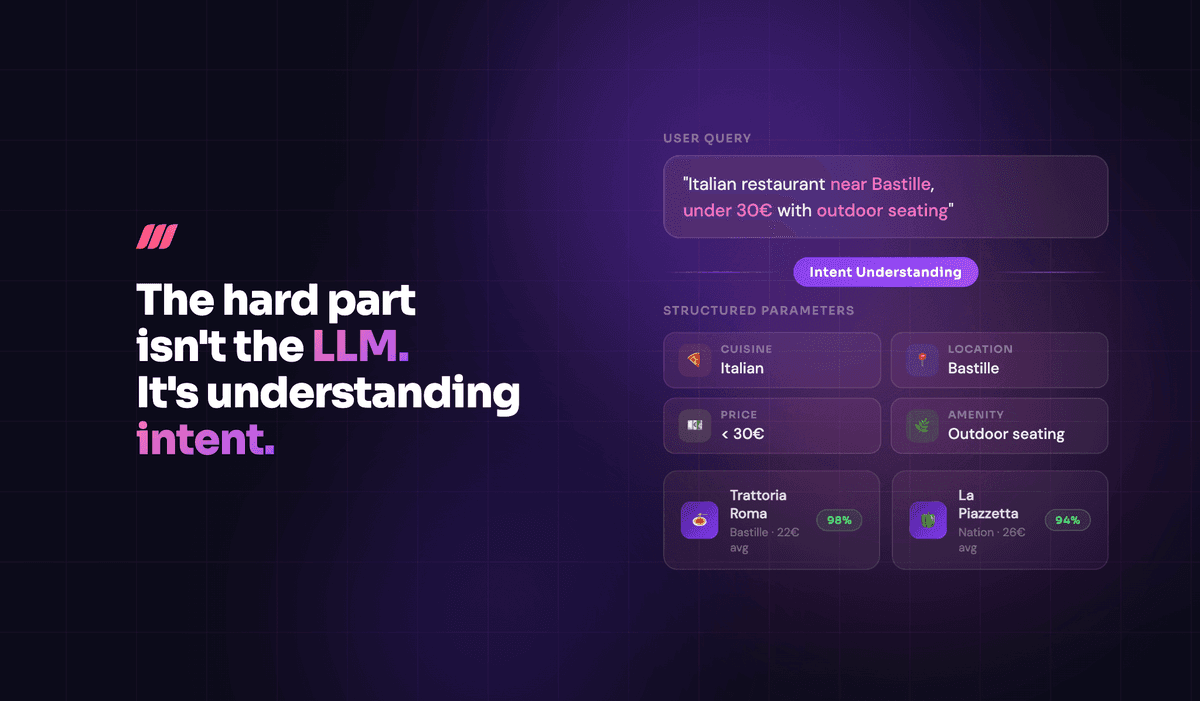

1. Context-aware semantic search

Ever tried looking up a movie but couldn’t remember the title? You type in “that space movie where time moves slower on a planet,” and somehow, the system knows you mean Interstellar. That’s not luck. That’s a vector database doing its thing.

A basic keyword search would need an exact match — so good luck if you can’t remember the title. On the other hand, a vector search knows that “space,” “time dilation,” and “father-daughter space story” all relate to Interstellar (okay, and a few other movies too). Fascinating, isn’t it?

Let’s look at another example. Hugging Face integrates Meilisearch to facilitate search across more than 300,000 AI models, datasets, and demos, ensuring that domain-specific queries yield highly relevant results. Without vector search, a keyword-based approach would fail to grasp relationships between similar AI models or datasets.

2. High-performance, low-latency queries

People are gone if search results take more than a second to load. Nobody has the patience for slow results, and with a vector database, they don’t have to wait.

Retail search must balance speed and accuracy, especially in real-time customer interactions. Louis Vuitton deploys Meilisearch in brick-and-mortar stores to enable instant and context-aware product searches.

Imagine a medical research lab running a database of genetic mutations. A scientist types in a query, looking for similar mutations that have led to breakthrough treatments. A standard database would churn through millions of records and waste time the scientist doesn’t have. A vector database, meanwhile, would find patterns instantly and bring up the closest matches before the coffee gets cold.

3. Precision AI recommendations

You’re watching a true crime documentary on Netflix. Next thing you know, the platform suggests a legal drama and a psychological thriller—but not just any thriller. One with the same kind of suspense, pacing, and dark undertones.

That’s not luck. It’s a vector database working behind the scenes, tracking viewing habits, detecting subtle patterns, and recommending something that fits your taste.

Search-driven personalization is crucial for user engagement. Bookshop.org reported a 43% improvement in purchase conversion after integrating Meilisearch to match books based on themes, genres, and user preferences rather than relying solely on title and author keywords.

4. Self-learning AI with vector insights

A machine learning model is only as smart as the data it’s fed. Garbage in, garbage out. A vector database makes sure AI gets the good stuff.

Take self-driving cars. They rely on massive amounts of visual and sensor data. A basic database would treat each image or sensor reading as a separate entry. A vector database sees the whole picture, comparing millions of tiny details (road signs, pedestrian movements, weather conditions) so the driving system can make split-second decisions.

That’s why autonomous systems, fraud detection, and AI chatbots depend on vector search.

5. Scalable high-dimensional search

More data? No problem. More users? Bring it on. More queries? This thing was built for that.

Say you run a global hiring platform. Recruiters are searching for candidates with specific skill sets across industries, experience levels, and locations. A traditional database slows down as searches get more complex. But not a vector database. In fact, it processes queries across millions of resumes in seconds by ranking candidates based on actual qualifications. No more LinkedIn keyword hacks.

What are the disadvantages of using a vector database?

Vector databases are fast, powerful, and game-changing for AI and search. But let’s not kid ourselves — they’re not perfect. They need serious computing muscle, careful setup, and a solid game plan to run at full speed.

Think of them like a high-performance sports car. Unmatched acceleration and precision handling, but not built for every road. Let’s break down the trade-offs.

1. Heavy on the computing power

Vector search burns through processing power. Every time you hit search, it runs complex calculations across thousands of dimensions. Without the right hardware, it lags, stalls, and struggles to keep up.

Bildhistoria maintains a vast archive of historical photographs, so it needs high-speed retrieval across millions of image embeddings. Without GPU acceleration or optimized indexing, similarity searches at that scale run into excessive compute requirements. A powerful system is the only way to keep up with real-time queries.

2. Setup isn’t plug-and-play

SQL databases? Set it and forget it. Vector databases? Not that simple.

Tuning indexing methods and similarity models takes serious know-how. Miss a setting, and performance tanks fast.

A research lab processing millions of medical scans needs precision. If the system isn’t configured right, scientists waste time waiting instead of making breakthroughs.

3. Storage adds up fast

Vector databases eat storage for breakfast. One embedding can have hundreds or thousands of dimensions, and they stack up really fast.

A video platform tracking every scene, sound, and subtitle? Data management overload. Without smart compression, storage gets out of hand — fast.
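A quick back-of-the-envelope calculation shows how fast it adds up. The figures below (10 million vectors, 768 dimensions, 32-bit floats) are assumptions picked for illustration, and the estimate covers raw vectors only, not index structures or metadata.

```python
def raw_vector_bytes(num_vectors, dimensions, bytes_per_value=4):
    """Size of the raw embeddings alone (float32 = 4 bytes per value)."""
    return num_vectors * dimensions * bytes_per_value

GIB = 1024 ** 3

# Hypothetical collection: 10 million embeddings of 768 dimensions each.
float32_gib = raw_vector_bytes(10_000_000, 768) / GIB
int8_gib = raw_vector_bytes(10_000_000, 768, bytes_per_value=1) / GIB
```

Under these assumptions the float32 vectors alone take roughly 28.6 GiB, and storing one byte per value instead cuts that to about a quarter.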

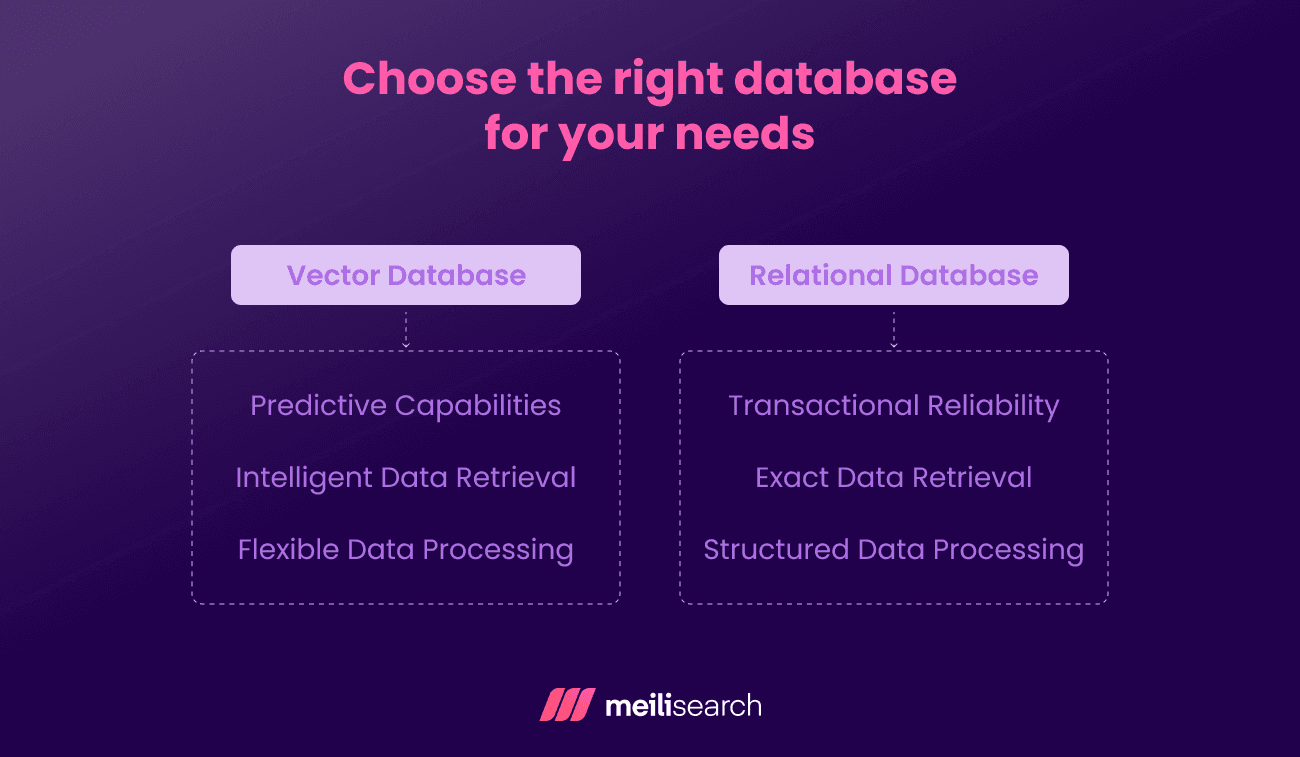

4. Not always the right tool for the job

Vector search crushes AI-powered recommendations and deep search. However, structured data points are not a strong suit.

If you need to track sales, invoices, or inventory, stick with relational databases. Vector search is built for meaning, not simple lookups.

Vector databases shine where AI and search demand precision. But how do they compare to traditional databases? Let’s break it down.

How does a vector database differ from a traditional relational database?

Relational databases love structure. Everything fits into neat rows and columns. That’s great for banking or inventory management. But AI? Search engines? Recommendation systems? They need more flexibility. That’s where vector databases come in.

Schema vs. flexibility

A relational database needs a strict blueprint before storing anything. Every entry must fit the mold. That’s perfect for keeping track of invoices, payroll, or customer orders.

A vector database doesn’t need a fixed structure. It handles text, images, and audio without breaking a sweat. AI-powered search thrives on that kind of freedom.

Exact queries vs. semantic understanding

A relational database retrieves what you ask for. No more, no less. That’s great when looking up a specific purchase order or ID number.

A vector database figures out what you mean. Searching for movies with the same feel as The Dark Knight will get you films with similar themes, pacing, and cinematography.

SQL constraints vs. adaptive algorithms

While SQL databases optimize for ACID (Atomicity, Consistency, Isolation, Durability) compliance, vector databases prioritize eventual consistency and high-throughput queries. They often trade strict transactional guarantees for performance gains.

Vector databases use measures such as cosine similarity and Euclidean distance to quantify how closely embeddings relate, which lets them surface patterns that exact-match queries miss. That’s how Spotify recommends songs before you know what you’re in the mood for.

Data logging vs. predictive insights

A relational database tracks purchases, bookings, and payments; it is perfect for structured, real-time updates.

A vector database predicts what will happen next. Instead of recording what someone bought, it suggests what they want next.

Structure vs. discovery

One keeps things organized, and the other finds hidden connections. Need structured data points with pinpoint accuracy? Relational databases win. Need AI-powered insights that go beyond the obvious? Vector databases are the way to go.

Let’s look at real-world use cases where vector search makes all the difference.

What are common use cases for vector databases?

AI, search engines, and recommendation systems depend on vector databases to connect dots traditional databases can’t even see. Whether it’s predicting your next binge-watch or stopping fraud before it happens, vector databases drive the smartest tech on the planet.

Recommendation engines

Streaming services like Netflix and Spotify process vast amounts of content by mapping each item into a high-dimensional vector space. In this space, genre, pacing, and narrative style align based on mathematical proximity rather than predefined categories.

Instead of applying rigid genre tags that often fail to capture nuance, recommendation engines leverage cosine similarity and latent factor models to surface highly relevant recommendations without requiring exact keyword matches.

Image and video retrieval

Text-based search often fails when dealing with images because it depends on manually assigned metadata that doesn’t always accurately reflect visual details. Vector databases allow AI systems to process color, texture, and spatial relationships within images by converting them into embeddings that encapsulate visual features numerically.

Google Photos (the Photos app on your iPhone, too) can find every beach trip you’ve ever taken without you tagging a thing. Pinterest can match the exact shade of blue in your outfit. Vector search reads patterns, textures, and shapes much as a human would.

Natural language processing (NLP)

Chatbots used to be as clueless as your grandma texting for the first time. Now? Your bank’s support chatbot sounds almost human. With vector indexing, AI systems like ChatGPT, Google Gemini (formerly Bard), and Meta’s Llama analyze user queries in high-dimensional space and identify the intent rather than just recognizing specific words.

Vector indexing powers AI that understands the intent behind our words. That’s why chatbots respond with context, AI assistants remember what we ask, and language models write like they have a personality.

Semantic search

Google understands meaning now. Apologies for stating the obvious.

If you type “cheap flights to LA,” you get the best deals, not just pages stuffed with “cheap” and “flights.” Ask Alexa what happened in sports today, and it pulls headlines, not just random pages with “sports” in the title. That’s vector search reading between the lines.

Hybrid ranking that pairs lexical scoring such as BM25+ with transformer-based embeddings refines these results, structuring search outcomes to prioritize user needs rather than simple keyword density.
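One simple way to combine a keyword score with a vector score is a weighted blend. The sketch below assumes both scores are already normalized to the 0 to 1 range; the `semantic_ratio` parameter name and every score in it are hypothetical values chosen for illustration, not the output of a real ranker.

```python
def hybrid_score(keyword_score, vector_score, semantic_ratio=0.5):
    """Weighted blend: 0.0 is pure keyword ranking, 1.0 is pure vector ranking."""
    return (1 - semantic_ratio) * keyword_score + semantic_ratio * vector_score

# Hypothetical per-document scores for the query "cheap flights to LA".
candidates = {
    "Budget airfare deals to Los Angeles": {"keyword": 0.20, "vector": 0.95},
    "Cheap cheap cheap flights flights": {"keyword": 0.90, "vector": 0.30},
    "LA restaurant guide": {"keyword": 0.10, "vector": 0.25},
}

def rank(candidates, semantic_ratio):
    """Order documents by their blended score, best first."""
    return sorted(
        candidates,
        key=lambda doc: hybrid_score(
            candidates[doc]["keyword"], candidates[doc]["vector"], semantic_ratio
        ),
        reverse=True,
    )

keyword_heavy = rank(candidates, semantic_ratio=0.1)
semantic_heavy = rank(candidates, semantic_ratio=0.9)
```

With the weight tilted toward keywords, the keyword-stuffed page wins. Tilt it toward the vector score and the page that actually matches the intent moves to the top.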

Similarity search

Ever wish you could describe a vibe instead of a thing? Vector databases make that possible through vector similarity. Vector search maps product descriptions, customer reviews, and visual features into high-dimensional spaces where similarity is determined mathematically rather than by direct keyword overlap.

Amazon’s "Find Similar Items" feature uses vector embeddings to analyze product descriptions, user reviews, and visual attributes to recommend alternatives.

If a shopper searches for "minimalist wooden coffee table", traditional keyword search might return any table with "wood" in the title. Vector search instead maps the product’s dimensions, material, style, and customer ratings to find near-identical designs even if the seller describes them differently.

Retrieval augmented generation (RAG)

AI used to rely on pre-set knowledge. Now, it can look things up as it goes.

Language models like GPT-3.5 and GPT-4 rely on pre-trained knowledge, which means their responses are only as up-to-date as their last training cycle.

However, vector-powered retrieval systems allow AI to access real-time, domain-specific information. This naturally boosts accuracy and relevance.
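In outline, RAG has two steps: retrieve the passages closest to the question, then hand them to the language model as context. The sketch below stops short of calling a model. The two-dimensional vectors, the sample passages, and the prompt wording are all assumptions for illustration.

```python
import math

def retrieve(query_vector, store, k=2):
    """Return the k passages whose embeddings are closest to the query."""
    ranked = sorted(store, key=lambda item: math.dist(query_vector, item["vector"]))
    return [item["text"] for item in ranked[:k]]

def build_prompt(question, passages):
    """Prepend retrieved passages so the model can ground its answer in them."""
    context = "\n".join(f"- {passage}" for passage in passages)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"

# Hypothetical pre-computed embeddings; a real system would call an embedding model.
store = [
    {"text": "Refunds are processed within 5 business days.", "vector": [0.9, 0.1]},
    {"text": "Our office is closed on public holidays.", "vector": [0.1, 0.9]},
    {"text": "Refund requests need an order number.", "vector": [0.8, 0.2]},
]

question = "How long do refunds take?"
question_vector = [0.88, 0.12]  # stand-in for the embedded question
prompt = build_prompt(question, retrieve(question_vector, store))
```

Because the knowledge lives in the store rather than in the model weights, updating an answer means updating a passage, not retraining.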

Anomaly detection

Spotting fraud used to be a slow process. Not anymore.

For example, Visa’s AI-driven fraud detection examines transaction vectors by considering factors like location, transaction history, device fingerprints, and spending patterns.

If a card suddenly processes an unusually high-value purchase in a foreign country with no prior travel history, Visa’s AI detects the anomaly and may instantly flag or block the transaction.
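The general idea of vector-based anomaly detection can be sketched as "how far is this transaction from the cardholder's usual behavior?" This is not a description of any real fraud system: the two features, the sample history, and the threshold rule are assumptions made for illustration.

```python
import math

def centroid(vectors):
    """Component-wise mean of a list of equal-length vectors."""
    return [sum(dim) / len(vectors) for dim in zip(*vectors)]

def is_anomalous(candidate, history, factor=3.0):
    """Flag a vector that sits far from the centroid of past vectors.

    The threshold is `factor` times the average distance of the history
    to its own centroid, an illustrative rule of thumb.
    """
    center = centroid(history)
    typical = sum(math.dist(vec, center) for vec in history) / len(history)
    return math.dist(candidate, center) > factor * typical

# Hypothetical features: (amount in thousands, distance from home in thousands of km)
history = [(0.05, 0.01), (0.08, 0.02), (0.03, 0.00), (0.06, 0.01)]

routine = is_anomalous((0.07, 0.01), history)     # small local purchase
suspicious = is_anomalous((2.50, 8.00), history)  # large purchase far from home
```

Production systems use many more features and learned models, but the underlying geometry is the same: outliers sit far from the cluster of normal behavior.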

What are some examples of popular vector databases?

Vector databases make AI smarter and search engines faster. Some are built for speed, others for handling massive datasets. Choosing the right one depends on what you need. Let’s look at the top players and what makes them great.

1. Meilisearch

Meilisearch delivers instant search results while handling typos like a pro. It combines vector search with full-text search, which makes it a strong fit for e-commerce, SaaS, and content platforms.

Its hybrid approach lets users find what they need even if they don’t type the exact words. The flexible API makes integration easy, and real-time indexing keeps data fresh. After all, nobody has the time to correct their mistakes, especially on Google Search. Meilisearch is great because it:

- Blends full-text and vector search for better accuracy

- Updates data instantly without lag

- Scales smoothly for large apps

2. Pinecone

Pinecone removes the hassle of managing infrastructure. It’s fully managed so teams can focus on AI models instead of database maintenance.

It delivers real-time filtering and keeps search results sharp as new types of data come in. It scales on its own, so one can rest assured that the performance stays fast no matter the size of the dataset.

Key features:

- No setup or maintenance needed

- Real-time filtering keeps search results relevant

- Handles billions of vectors without slowing down

3. Qdrant

Qdrant is a high-speed, open-source vector database. It’s designed for AI-powered search and allows fine-tuned ranking to improve relevance.

It perfectly complements machine learning pipelines, making AI models more effective over time. Distributed processing allows it to scale across multiple machines when needed.

Key features:

- Open source and flexible for AI apps

- Custom ranking improves search results

- Supports multi-node deployments for high efficiency

4. Milvus

Milvus is designed for deep learning and massive datasets. It’s a favorite in industries like biotech, finance, and cybersecurity.

It supports GPU acceleration, which means faster search and analysis. With enterprise-level scalability, it handles huge AI workloads without breaking a sweat.

Key features:

- Optimized for deep learning and AI

- Uses GPU power for fast performance

- Trusted by enterprise companies in biotech, finance, and security

5. Chroma

Chroma helps LLMs (large language models) retrieve data in real time. AI assistants and chatbots use it to stay updated with the latest knowledge.

It’s a top pick for Retrieval-Augmented Generation (RAG), helping AI pull fresh, relevant data instead of relying on outdated training data.

Key features:

- Perfect for AI-powered search and chatbot training

- Fast and memory-efficient for large-scale applications

- Works with OpenAI, Hugging Face, and other AI frameworks

Each database has strengths based on different metrics. Meilisearch is a great choice if you need a fast, typo-tolerant search. Pinecone is a strong option if scalability and automation matter.

Looking for open-source flexibility? Qdrant delivers. Need deep learning support? Milvus is built for it. If LLMs and AI assistants are your focus, Chroma stands out.

Regardless of your choice, vector search is shaping the future of generative AI and search technology.

Vector databases power the internet as you know it

Vector databases are not some distant dream. They exist right now and are transforming how we use data. They let AI search work through huge volumes of data every second, and yes, they’re behind those Netflix suggestions as well. If speed, accuracy, and scale matter, vector search earns a spot on your radar.

Big companies and startups trust vector databases to sharpen their generative AI models. E-commerce sites use them to predict what customers want. Streaming services seem to read your mind and suggest the perfect movie. Fraud detection systems catch culprits before they strike.

No more basic keyword matching. No more rigid, lifeless queries. Vector databases grasp your intent, not just your words. Vector databases drive intelligent search, powering AI recommendations, real-time retrieval, and fraud detection at scale.

Start with a 14-day free trial of Meilisearch Cloud or request a demo to discuss your needs with our search experts.

Frequently asked questions (FAQs)

How do vector databases store and index data?

They convert text, images, and sound into numerical vectors. These vectors live in a high-dimensional vector space where similar items lie close together. Efficient indexing methods then cut search time to milliseconds.

What are the different indexing techniques used in vector databases?

Common choices include Hierarchical Navigable Small World (HNSW) and Inverted File Index (IVF). HNSW builds a fast graph for search, while IVF groups similar vectors for quick lookup. Some databases use product quantization to reduce vector size and save storage. Some also use Locality-Sensitive Hashing (LSH) to group similar vectors efficiently.
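LSH is the easiest of these to show in a few lines. The sketch below uses the random-hyperplane variant, where each hash bit records which side of a random plane a vector falls on. The bit count, the seed, and the sample vectors are assumptions for illustration.

```python
import random

def make_hyperplanes(num_bits, dimensions, seed=0):
    """Random Gaussian normal vectors, one per hash bit."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(dimensions)] for _ in range(num_bits)]

def lsh_signature(vector, hyperplanes):
    """One bit per hyperplane: which side of it the vector falls on."""
    return tuple(
        1 if sum(h * v for h, v in zip(plane, vector)) >= 0 else 0
        for plane in hyperplanes
    )

def build_buckets(vectors, hyperplanes):
    """Group vector ids by signature; a lookup then scans a single bucket."""
    buckets = {}
    for vec_id, vec in vectors.items():
        buckets.setdefault(lsh_signature(vec, hyperplanes), []).append(vec_id)
    return buckets

planes = make_hyperplanes(num_bits=8, dimensions=3)
vectors = {
    "a": [1.0, 2.0, 3.0],
    "a_scaled": [2.0, 4.0, 6.0],       # same direction as "a"
    "a_flipped": [-1.0, -2.0, -3.0],   # opposite direction
}
buckets = build_buckets(vectors, planes)
```

Vectors pointing the same way always share a bucket, while the flipped vector lands in a different one. Vectors that are merely close in angle share a bucket with high probability, which is what makes the lookup approximate.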

How do vector databases handle large-scale data?

They scale horizontally by adding more servers instead of overloading one. Distributed architecture spreads the workload, keeping performance high even with billions of records. Cloud-native options optimize cost and efficiency.
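The pattern behind this is often called scatter-gather: every shard answers the query for its own slice of the data, and a coordinator merges the partial results. In this sketch the shards are plain dictionaries searched one after another, an assumption made for simplicity; a real cluster would query them in parallel over the network.

```python
import heapq
import math

def search_shard(query, shard, k):
    """Each shard returns its own k best (distance, id) pairs."""
    scored = ((math.dist(query, vec), vec_id) for vec_id, vec in shard.items())
    return heapq.nsmallest(k, scored)

def search_cluster(query, shards, k):
    """Scatter the query to every shard, then merge the partial results."""
    partial = [hit for shard in shards for hit in search_shard(query, shard, k)]
    return [vec_id for _, vec_id in heapq.nsmallest(k, partial)]

# Hypothetical shards, as if the vectors were spread over three servers.
shards = [
    {"doc-1": (0.1, 0.1), "doc-2": (0.9, 0.9)},
    {"doc-3": (0.2, 0.1), "doc-4": (0.5, 0.5)},
    {"doc-5": (0.8, 0.2)},
]

top = search_cluster((0.0, 0.0), shards, k=3)
```

Asking each shard for its own top `k` is enough to guarantee the merged top `k` is correct, since no global winner can rank below `k` within its own shard.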

How does similarity search work in a vector database?

Similarity search relies on Approximate Nearest Neighbor (ANN) techniques to quickly find and rank relevant results. It measures the distance between the query vector and the stored vectors. The closer two vectors lie, the more alike they are. Metrics such as cosine similarity, Euclidean distance, and dot product drive this process. This method powers recommendations, AI search, and fraud detection.

How do vector databases integrate with machine learning models?

They fit into AI pipelines seamlessly. ML models produce embeddings; vector databases store them, and similarity search retrieves the top matches. This process makes chatbots, recommendation engines, and AI assistants smarter over time.

How do vector databases compare to key-value stores and graph databases?

Key-value stores fetch exact matches quickly. Graph databases track relationships between nodes. Vector databases find similarities within unstructured data. Each serves a purpose, but vector search leads in AI applications.

What industries benefit the most from vector databases?

Tech, finance, e-commerce, cybersecurity, and healthcare all reap rewards. Any sector that uses AI search or recommendations needs vector databases. Fraud detection, content moderation, and real-time personalization all benefit.

What are the performance considerations when choosing a vector database?

Look at latency, scalability, and accuracy. Some databases excel with large datasets, while others focus on low-latency search. The best choice depends on your use case, budget, and AI needs.