How to cache semantic search: a complete guide

Learn how to cache semantic search to slash API costs and response times. Discover practical strategies for implementing caching.

When your AI application starts hemorrhaging $500 daily in OpenAI API calls and users are drumming their fingers waiting 2 seconds for responses, semantic caching isn't just a nice-to-have. It's a lifeline.

While traditional caches match queries word-for-word, semantic caching understands the deeper meaning.

In this guide, you'll discover how to implement semantic caching that slashes response times and API costs. Whether you're building a chatbot, search engine, or AI-powered application, these strategies will help you create lightning-fast, cost-effective semantic search that keeps users happy and your infrastructure lean. You'll learn how to:

- Reduce response times dramatically

- Achieve massive API cost savings

- Handle similar questions without repeatedly querying language models

Introduction to semantic caching

Semantic caching revolutionizes how we store and retrieve information in modern applications. Traditional caching relies on exact matches, while semantic caching understands the meaning behind queries. This allows systems to recognize and respond to similar questions, even if they're worded differently.

This technique is valuable for AI-powered applications. Processing similar queries repeatedly can be both time-consuming and expensive.

What is semantic caching

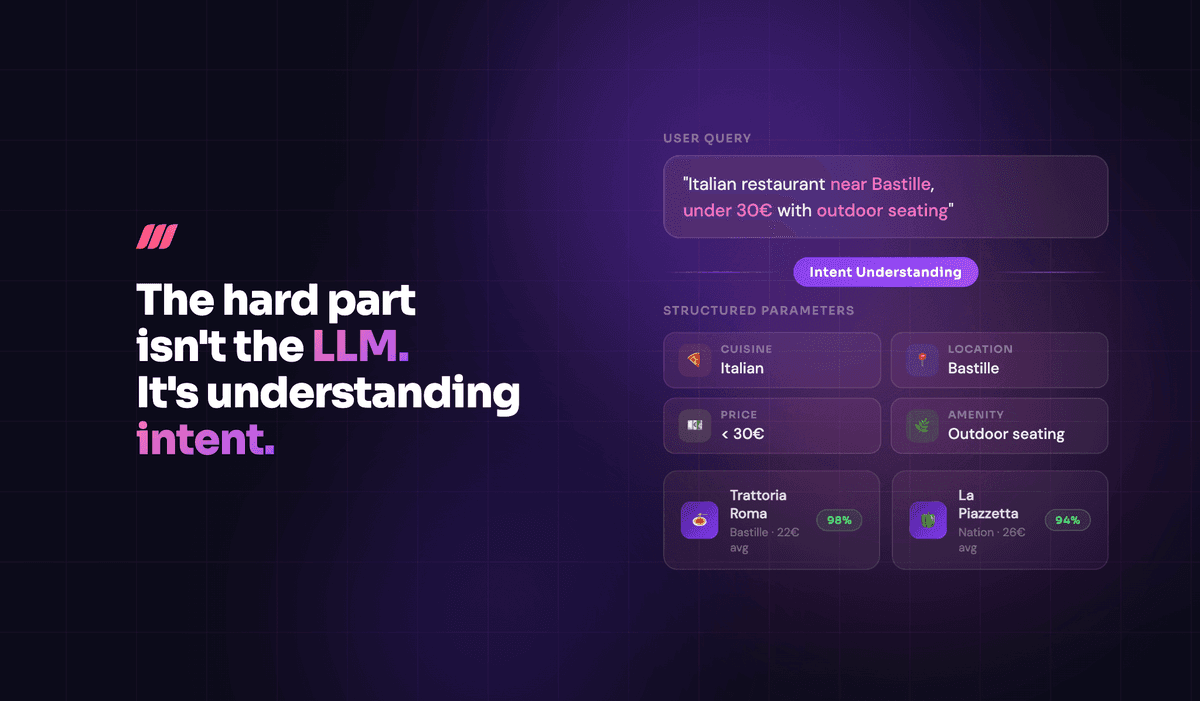

Semantic caching is a data retrieval technique that stores each response together with a vector embedding of the query that produced it. When a user asks a question, the system compares the question's embedding with the stored ones and reuses a cached response if the meaning is close enough, rather than requiring an exact text match.

For example, "What's the weather in New York?" and "Tell me New York's forecast" can pull from the same cached response, saving processing time and resources.

Comparing semantic caching vs traditional caching

Traditional caching and semantic caching serve different purposes in modern applications. Here's a comparison:

- Traditional caching: Relies on exact matches and specific keys to retrieve data. Think of it as using a filing cabinet where you need the exact file name.

- Semantic caching: Understands context and meaning. It's like having a smart assistant who can find related information even when you ask in different ways.
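To make the contrast concrete, here is a minimal sketch. It is not a real embedding pipeline: `toy_embed` is a hypothetical bag-of-words stand-in for an embedding model, the cached answer is made up, and the 0.5 threshold only suits this toy scoring.

```python
import math
import re
from collections import Counter

def toy_embed(text):
    # Hypothetical stand-in for an embedding model: word counts as a sparse vector.
    return Counter(re.findall(r"[a-z]+", text.lower()))

def cosine(a, b):
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

# One cached answer, stored two ways.
exact_cache = {"what's the weather in new york?": "Sunny, 75F"}
semantic_entries = [(toy_embed(q), answer) for q, answer in exact_cache.items()]

def exact_lookup(query):
    # Traditional cache: the key must match character for character.
    return exact_cache.get(query.lower())

def semantic_lookup(query, threshold=0.5):
    # Semantic cache: return the closest stored answer if it is similar enough.
    q = toy_embed(query)
    score, answer = max(
        ((cosine(q, vec), ans) for vec, ans in semantic_entries),
        key=lambda pair: pair[0],
    )
    return answer if score >= threshold else None

rephrased = "What is the weather in New York today?"
print(exact_lookup(rephrased))     # None: the wording differs
print(semantic_lookup(rephrased))  # Sunny, 75F
```

A real embedding model would also match paraphrases that share few words, such as "Tell me New York's forecast", which this word-count stand-in cannot.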

Advantages of semantic caching

Semantic caching offers several advantages for real-world applications:

- Reduced API costs by avoiding redundant queries.

- Faster response times for similar questions.

- More consistent answers across related queries.

- Better scalability for AI-powered applications.

When to choose semantic caching

Consider semantic caching for your application if:

- Users might ask the same question in different ways.

- Response consistency is crucial.

- API costs need to be optimized.

- Real-time response is essential.

Core concepts of semantic caching

Understanding the fundamental components and applications of semantic caching is crucial for successful implementation. Let's explore how these elements work together to create efficient caching systems that enhance your application's performance.

Key components of semantic caching systems

Several critical components form the foundation of semantic caching.

- Embedding model: Converts queries into vector representations capturing their meaning.

- Vector database: Stores these vectors, allowing for quick similarity searches for new queries.

- Cache layer: Sits between your application and underlying systems, managing query-response pairs and their semantic meanings. It decides whether to serve cached content or process new requests.

- Vector search mechanism: Evaluates incoming queries against stored embeddings using similarity thresholds to determine cache hits.

Semantic cache in RAG

Retrieval-Augmented Generation (RAG) systems use semantic caching to improve performance and reduce costs. When a user submits a query, the system first checks the semantic cache for similar questions before going through the full retrieval and generation process. This is especially effective for handling common queries in customer support or knowledge base applications.

The integration process involves three main steps:

- Embed the incoming query into a vector representation.

- Search the cache for semantically similar queries.

- If a match is found, return the cached response immediately. If not, proceed with the traditional RAG pipeline and store the new result for future use.

By understanding these core concepts and components, you can effectively implement and optimize semantic caching in your AI applications.

Implementing semantic caching for an e-commerce site

Semantic caching can significantly enhance how e-commerce platforms handle product searches and customer queries. By adopting a smart caching mechanism, businesses can boost search performance and improve user experience.

We will go through these key steps:

- Configure the embedding model by initializing OpenAI embeddings and the Meilisearch client with the appropriate connection parameters.

- Create a vector store with a custom configuration that specifies the index name, embedding dimensions, and text key.

- Implement a semantic search function that converts queries into vector embeddings and retrieves similar entries from the vector store.

- Develop cache management logic that handles cache hits and misses, with a fallback to a database search and optional cache updates.

Setting up the semantic caching infrastructure

Building a semantic caching system requires careful planning and the right tools. Here's a step-by-step guide to implementation.

Choosing core technologies

Start by selecting the technologies for your system. Meilisearch is an excellent vector search engine for semantic caching. For this example, we'll use Python with OpenAI embeddings to create a context-aware caching solution.

Installing required dependencies

Set up your project environment by installing the necessary libraries:

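    pip install meilisearch langchain openai python-dotenv

This command installs the Meilisearch Python client, LangChain for embedding and vector store management, the OpenAI SDK, and python-dotenv for loading credentials from a .env file. The Meilisearch server itself runs separately, either self-hosted or on Meilisearch Cloud.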

Configuring the embedding model

The core of semantic caching is converting queries into meaningful vector representations. Below is a sample configuration using OpenAI embeddings:

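    # In recent LangChain releases this import lives in the langchain_openai package
    from langchain.embeddings.openai import OpenAIEmbeddings
    import meilisearch

    # Set up OpenAI embeddings (reads OPENAI_API_KEY from the environment)
    embeddings = OpenAIEmbeddings()

    # Initialize Meilisearch client
    client = meilisearch.Client(
        url="your_meilisearch_url",
        api_key="your_api_key",
    )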
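Since python-dotenv is among the installed dependencies, one option is to keep credentials out of the source file. The sketch below is an assumption about how you might organize this: the variable names `MEILI_URL` and `MEILI_API_KEY` are hypothetical, and `load_settings` is an illustrative helper, not part of any library.

```python
import os

def load_settings(env=os.environ):
    """Read connection settings from environment variables instead of hardcoding them."""
    required = ("MEILI_URL", "MEILI_API_KEY", "OPENAI_API_KEY")
    missing = [name for name in required if not env.get(name)]
    if missing:
        raise RuntimeError(f"Missing environment variables: {', '.join(missing)}")
    # OPENAI_API_KEY is only checked here; the OpenAI client reads it from the environment.
    return {"url": env["MEILI_URL"], "api_key": env["MEILI_API_KEY"]}
```

With python-dotenv, calling `load_dotenv()` (imported from `dotenv`) before `load_settings()` populates the environment from a local .env file, and the result can be passed on as `meilisearch.Client(**load_settings())`.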

Creating a vector store for semantic caching

Setting up a vector store is crucial for implementing an effective semantic cache. Here's a comprehensive approach to creating a vector store using Meilisearch and OpenAI embeddings:

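    from langchain.vectorstores import Meilisearch

    # Create vector store with custom configuration
    vector_store = Meilisearch(
        client=client,
        embedding=embeddings,
        index_name="semantic_cache",
        text_key="content",
    )

    # Optional: define embedder configuration.
    # Register it under the index's embedders setting in Meilisearch
    # so the index accepts user-provided vectors.
    embedder_config = {
        "custom": {
            "source": "userProvided",
            "dimensions": 1536,  # size of OpenAI's text-embedding-ada-002 vectors
        }
    }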

This setup allows you to create a robust semantic cache that can efficiently store and retrieve contextually similar queries and their responses.

Implementing the semantic cache mechanism

The key to semantic caching is creating a retrieval system that understands query intent.

Creating a semantic search function

Here’s an example of a semantic search function:

    def semantic_cache(query, k=3):
        # similarity_search embeds the query internally, so a separate
        # embeddings.embed_query(query) call would only add an extra API request
        results = vector_store.similarity_search(
            query=query,
            k=k,
        )
        return results

This function:

- Converts the query into a vector representation.

- Searches for semantically similar results in the vector store.

- Returns the most relevant matches.

Handling cache hits and misses

A robust caching strategy must handle both cache hits and misses effectively:

    # perform_database_search and update_semantic_cache are
    # application-specific helpers (not shown here)
    def process_product_search(query):
        # Check the semantic cache
        cached_results = semantic_cache(query)

        if cached_results:
            # Cache hit: return cached results
            return {
                "source": "cache",
                "results": cached_results,
            }
        else:
            # Cache miss: perform full database search
            full_results = perform_database_search(query)

            # Optionally, store new results in cache
            update_semantic_cache(query, full_results)

            return {
                "source": "database",
                "results": full_results,
            }
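One caveat about the check above: a nearest-neighbor search typically returns the closest entries however far away they are, so `if cached_results:` counts as a hit whenever the cache is non-empty. A hedged sketch of a stricter check follows. It assumes you can obtain `(item, similarity)` pairs with similarity in the range 0 to 1, where higher means closer; `best_cache_hit` is an illustrative helper, not a library function.

```python
def best_cache_hit(scored_results, threshold=0.9):
    """Return the best-scoring cached item, or None if nothing clears the threshold."""
    if not scored_results:
        return None
    item, score = max(scored_results, key=lambda pair: pair[1])
    return item if score >= threshold else None

print(best_cache_hit([("red running shoes", 0.95), ("red sandals", 0.70)]))  # red running shoes
print(best_cache_hit([("red sandals", 0.70)]))                               # None
```

Many LangChain vector stores expose a `similarity_search_with_score` method that returns such pairs, but the meaning of the score (similarity or distance, and its range) varies between stores, so check it before choosing a threshold.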

Performance optimization techniques

To ensure efficient semantic caching, consider these strategies:

- Set a similarity threshold (e.g., 0.85–0.95) to filter results.

- Limit cache size to prevent memory issues.

- Use time-to-live (TTL) to refresh outdated cache entries.

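- Review your embedding model periodically for better accuracy. When you switch models, re-embed the cached entries or clear the cache, because vectors produced by different models are not comparable.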
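The size limit and TTL from the list above can be sketched with the standard library alone. This is one possible design, not a prescribed one: `BoundedTTLStore` is a hypothetical name, eviction is least-recently-used, and the injectable `clock` parameter exists only to make the class easy to test.

```python
import time
from collections import OrderedDict

class BoundedTTLStore:
    """Holds cache entries with a maximum size (LRU eviction) and a time-to-live."""

    def __init__(self, max_entries=1000, ttl_seconds=3600, clock=time.monotonic):
        self.max_entries = max_entries
        self.ttl_seconds = ttl_seconds
        self.clock = clock
        self._entries = OrderedDict()  # key -> (stored_at, value)

    def put(self, key, value):
        self._entries[key] = (self.clock(), value)
        self._entries.move_to_end(key)
        while len(self._entries) > self.max_entries:
            self._entries.popitem(last=False)  # evict the least recently used entry

    def get(self, key):
        entry = self._entries.get(key)
        if entry is None:
            return None
        stored_at, value = entry
        if self.clock() - stored_at > self.ttl_seconds:
            del self._entries[key]  # expired: drop it and report a miss
            return None
        self._entries.move_to_end(key)  # mark as recently used
        return value
```

In a semantic cache the key would usually be the ID of the matched entry in the vector store, so an expired or evicted entry should also be deleted from the index.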

Testing your semantic cache

Testing is critical to ensure reliability. Test scenarios should include:

- Exact match queries.

- Semantically similar queries.

- Completely new queries.

- Edge cases with minimal context.

Thorough testing ensures your system performs well across diverse search patterns.
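These scenarios can be captured in a small harness. The sketch below assumes your cache exposes a lookup callable that returns `None` on a miss; the function name, the example queries, and the expected outcomes are all hypothetical.

```python
def check_cache_scenarios(lookup, scenarios):
    """Run (query, should_hit) pairs through `lookup` and return the mismatches."""
    failures = []
    for query, should_hit in scenarios:
        was_hit = lookup(query) is not None
        if was_hit != should_hit:
            failures.append({"query": query, "expected_hit": should_hit, "was_hit": was_hit})
    return failures

# Hypothetical scenarios, assuming "wireless headphones" is already cached.
scenarios = [
    ("wireless headphones", True),                 # exact match
    ("bluetooth headphones without wires", True),  # semantically similar
    ("garden furniture", False),                   # completely new
    ("?", False),                                  # edge case with minimal context
]
```

An empty list from `check_cache_scenarios(your_lookup, scenarios)` means every scenario behaved as expected; any returned entries point to queries where the similarity threshold may need adjusting.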

Meilisearch’s fast vector search capabilities make semantic caching not only feasible but also highly efficient for e-commerce platforms.

Adding semantic caching to a RAG system

Retrieval-Augmented Generation (RAG) systems can greatly benefit from semantic caching. This integration improves efficiency, reduces costs, and enhances responsiveness in AI applications.

Understanding RAG and semantic caching integration

Semantic caching in RAG systems focuses on storing and retrieving query responses based on their meaning, not just exact text matches. This allows AI applications to quickly access previously generated responses while maintaining contextual relevance.

Key steps in integrating semantic caching into a RAG workflow include:

- Embedding transformation: Convert user queries into vector representations that capture semantic meaning. Use embedding models like BERT or OpenAI's embedding APIs for this transformation.

- Cache lookup mechanism: Use a vector database for fast, similarity-based searches. Meilisearch is a strong choice for its efficient semantic search capabilities, enabling quick matches between incoming queries and cached responses.

Implementing the semantic caching layer

Implementing a semantic caching layer requires creating a specialized class that manages query embeddings, similarity checks, and cached response retrieval. This code provides a practical approach to efficiently storing and accessing semantically similar query responses.

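    class RAGSemanticCache:
        def __init__(self, embedding_model, vector_store):
            self.embedding_model = embedding_model
            self.vector_store = vector_store
            self.similarity_threshold = 0.85

        def retrieve_cached_response(self, query):
            query_embedding = self.embedding_model.embed_query(query)
            # Assumes a vector store wrapper whose similarity_search accepts an
            # embedding and a threshold; adapt this call to your store's API
            similar_results = self.vector_store.similarity_search(
                query_embedding,
                threshold=self.similarity_threshold,
            )
            return similar_results[0] if similar_results else None

        def store_response(self, query, response):
            # Called on a cache miss; `add` is likewise an assumed wrapper method
            query_embedding = self.embedding_model.embed_query(query)
            self.vector_store.add(query_embedding, response)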

This class:

- Embeds queries into vectors.

- Searches for semantically similar results in the vector store.

- Returns a cached response if it meets the similarity threshold.
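For tests and local experiments, a dependency-free version of the same interface can be handy. The following is a sketch under stated assumptions: `InMemorySemanticCache` is a hypothetical class, `embed` is any callable you supply that maps text to a list of floats, and the linear scan is only suitable for small caches.

```python
import math

class InMemorySemanticCache:
    """Keeps (embedding, response) pairs in a list and matches by cosine similarity."""

    def __init__(self, embed, similarity_threshold=0.85):
        self.embed = embed  # callable: str -> list[float]
        self.similarity_threshold = similarity_threshold
        self._entries = []  # list of (embedding, response)

    @staticmethod
    def _cosine(a, b):
        dot = sum(x * y for x, y in zip(a, b))
        norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
        return dot / norm if norm else 0.0

    def store_response(self, query, response):
        self._entries.append((self.embed(query), response))

    def retrieve_cached_response(self, query):
        if not self._entries:
            return None
        q = self.embed(query)
        score, response = max(
            ((self._cosine(q, emb), resp) for emb, resp in self._entries),
            key=lambda pair: pair[0],
        )
        return response if score >= self.similarity_threshold else None
```

Swapping the list for a vector database changes only the storage and search calls; the threshold check stays the same.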

To ensure your semantic caching layer performs efficiently, apply these strategies:

- Dynamic similarity thresholds: Adjust thresholds based on your domain and use case.

- Cache maintenance: Use expiration and refresh mechanisms to keep responses relevant.

- Multi-turn context tracking: For conversational systems, include conversation history in cache retrieval to maintain context.
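Two of these strategies are easy to illustrate. The sketch below shows one way to fold recent conversation turns into the text that gets embedded, plus a per-category threshold lookup. The function names, the two-turn window, and the threshold values are illustrative assumptions, not recommendations.

```python
def build_cache_text(query, history, max_turns=2):
    """Prefix the query with the most recent turns so follow-ups are embedded in context."""
    recent = history[-max_turns:] if max_turns > 0 else []
    return "\n".join([*recent, query])

# Illustrative per-category thresholds; tune these against your own traffic.
THRESHOLDS = {"faq": 0.85, "pricing": 0.95}

def threshold_for(category, default=0.90):
    return THRESHOLDS.get(category, default)

history = ["Do you sell running shoes?", "Yes, in sizes 6-12."]
print(build_cache_text("And in blue?", history))
```

Embedding the combined text keeps a follow-up such as "And in blue?" from matching an unrelated cached answer, at the cost of fewer cache hits across different conversations.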

Practical implementation example

Here’s how semantic caching can be integrated into a RAG workflow:

    def rag_with_semantic_cache(query, rag_system, semantic_cache):
        # Check semantic cache first
        cached_response = semantic_cache.retrieve_cached_response(query)
        if cached_response:
            return cached_response

        # If no cached response, use traditional RAG
        retrieved_docs = rag_system.retrieve_documents(query)
        generated_response = rag_system.generate_response(query, retrieved_docs)

        # Store the new response in the semantic cache
        semantic_cache.store_response(query, generated_response)

        return generated_response

This function:

- Checks the semantic cache for a response.

- Falls back to the RAG system if no cached response exists.

- Stores new responses in the cache for future use.

Benefits for different applications

Semantic caching is especially useful in:

- Customer support chatbots.

- Enterprise knowledge management systems.

- Educational platforms.

- Research and analytics tools.

Optimization strategies

Once your semantic caching system is running, focus on optimization to ensure it continues to deliver maximum value. Let's explore key strategies to maintain and improve your system's performance over time.

Ensuring accuracy & consistency

Maintaining high accuracy in semantic caching requires regular monitoring and updates.

- Implement version control for cached responses to track changes and ensure users receive the most current information.

- Invalidate or update related cache entries when source data updates to prevent serving outdated information.

- Cache consistency becomes particularly important in distributed systems. Use a publish-subscribe system to propagate updates across your infrastructure, ensuring all cache nodes remain synchronized.

- Regular validation of cached responses helps maintain quality. Set up automated checks to verify cached responses still match their original context and meaning. Periodically compare cached responses against fresh generations to identify any drift in accuracy.
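Invalidation on source updates is simpler when each cached entry records which documents it was generated from. A minimal sketch, assuming entries are dictionaries with a `source_ids` set (a hypothetical schema) and that document IDs such as `policy-returns` are your own:

```python
def invalidate_by_source(entries, updated_doc_id):
    """Return (kept_entries, removed_count) after dropping entries built from the updated document."""
    kept = [entry for entry in entries if updated_doc_id not in entry["source_ids"]]
    return kept, len(entries) - len(kept)

entries = [
    {"query": "What is the return window?", "response": "30 days", "source_ids": {"policy-returns"}},
    {"query": "Do you ship abroad?", "response": "Yes", "source_ids": {"policy-shipping"}},
]
entries, removed = invalidate_by_source(entries, "policy-returns")
print(removed)  # 1
```

In a distributed setup, the updated document ID is the kind of message a publish-subscribe channel would carry, with each cache node running the same filter locally.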

Improving performance and efficiency

Performance optimization starts with careful monitoring of key metrics. Track cache hit rates, response times, and system resource usage to identify bottlenecks and optimization opportunities. Use dashboards to visualize these metrics and establish baseline performance expectations.
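As a starting point for such monitoring, hit rate and average latency can be tracked with a few counters. `CacheMetrics` below is a hypothetical helper; in production you would more likely export these values to your existing metrics system.

```python
class CacheMetrics:
    """Running counters for hit rate and average lookup latency."""

    def __init__(self):
        self.hits = 0
        self.misses = 0
        self.total_latency = 0.0

    def record(self, hit, latency_seconds):
        if hit:
            self.hits += 1
        else:
            self.misses += 1
        self.total_latency += latency_seconds

    @property
    def hit_rate(self):
        total = self.hits + self.misses
        return self.hits / total if total else 0.0

    @property
    def avg_latency(self):
        total = self.hits + self.misses
        return self.total_latency / total if total else 0.0
```

Calling `record(hit, latency_seconds)` once per request is enough to feed a dashboard with both numbers.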

Consider these advanced optimization techniques:

- Use predictive caching for frequently accessed queries.

- Use tiered caching with different storage solutions for hot and cold data.

- Optimize vector similarity calculations for faster retrieval.

- Compress embeddings while maintaining accuracy.
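As one example of embedding compression, scalar quantization maps each float to an 8-bit integer range with a single scale factor per vector. This is a simplified sketch with hypothetical function names; it shows the idea only, and any accuracy impact should be measured on your own data before adopting it.

```python
def quantize(vector):
    """Map floats to int8-range integers using one scale factor per vector."""
    scale = max(abs(x) for x in vector) / 127 or 1.0  # avoid a zero scale for all-zero vectors
    return [round(x / scale) for x in vector], scale

def dequantize(codes, scale):
    """Recover approximate floats from the integer codes."""
    return [c * scale for c in codes]

codes, scale = quantize([0.12, -0.5, 0.33, 0.0])
restored = dequantize(codes, scale)
```

Each restored value differs from the original by at most half the scale factor. When the codes are stored as 8-bit integers instead of 32-bit floats, the vectors take roughly a quarter of the space.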

Regular performance audits help identify improvement areas. Compare your system's performance against benchmarks and adjust your caching strategy accordingly. Document performance improvements and their impact on user experience and operational costs.

Common pitfalls and how to avoid them

Over-aggressive caching can lead to memory issues and degraded performance. Start with conservative cache sizes and grow based on actual usage patterns rather than theoretical maximums.

Poor similarity threshold selection represents another challenge. Setting thresholds too low leads to false positives and incorrect responses. Setting them too high results in unnecessary cache misses. Monitor your threshold settings and adjust based on user feedback and performance metrics.

Watch out for these additional pitfalls:

- Neglecting cache maintenance and cleanup.

- Failing to handle edge cases in query processing.

- Ignoring the impact of cache invalidation on system performance.

- Not implementing proper error handling and fallback mechanisms.

Focusing on these optimization strategies ensures your semantic caching system remains efficient and effective, providing consistent performance improvements over time.

Semantic caching: the path to high performance search

Implementing semantic caching for your search system is a powerful way to boost performance, reduce costs, and enhance user experience.

By following this guide's systematic approach to semantic caching, from infrastructure setup to optimization strategies, you'll be well-equipped to handle growing query volumes while maintaining fast response times.

No search engine expertise required! Get started with Meilisearch Cloud and experience an intuitive platform that makes implementing powerful search effortless, even for developers new to search technologies.