How to choose the best model for semantic search

Discover the best embedding model for semantic search. See our model performance, cost, and relevancy comparison in building semantic search.


Semantic search transforms how we find information online by focusing on understanding the meaning behind the words and phrases in user searches rather than relying solely on word-for-word matching.

Using machine learning (ML) and natural language processing (NLP), it decodes intent, context, and relationships between words, thus allowing for more conversational queries. This makes it a vital tool for businesses needing fast, accurate, and relevant results from large or complex data sets.

Embedding models are ML models that convert words and phrases into numerical vectors, which can then be compared based on their context and relationships. However, not all models are the same: accuracy, speed, indexing efficiency, and pricing can vary significantly between them.

Choosing the right semantic search model is crucial for delivering precise, efficient, and scalable search experiences for specific tasks.

Understanding the trade-offs—whether for multilingual support, search latency, or computational efficiency—will help you implement a high-performing semantic search system for your brand.

What is semantic search?

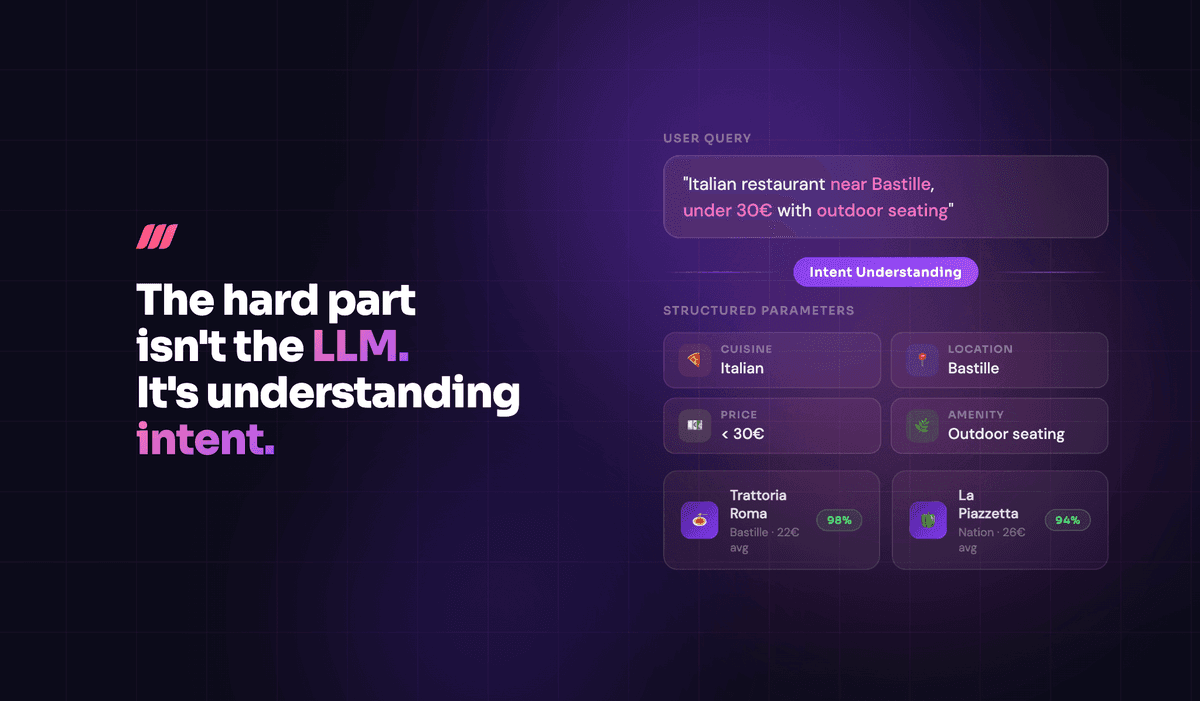

Semantic search is an AI-powered approach to information retrieval that draws on NLP, ML, and knowledge representation to deduce the intent and contextual meaning of user queries.

Traditional keyword-based search engines match exact strings of words, but that’s not always enough. Semantic search goes further by incorporating named entity recognition, relationships between words, and contextual disambiguation to deliver more relevant results.

Because they use deep learning models such as transformers, semantic search engines can understand synonyms and paraphrased queries, and can even infer implicit meanings.

Given its ability to enhance the search criteria, AI-powered search is crucial for applications requiring faster, broader, and even less structured data retrieval.

Why is semantic search important?

Semantic search is important because it improves search capability and relevance, thus helping users find information faster and with fewer irrelevant results. It also reduces uncertainty by interpreting natural language queries more clearly.

Like similarity search, it allows users to phrase their questions conversationally and intuitively rather than using specific, sometimes even long-tail, keywords. Think of it like this: if you can ask your neighbor on a bus a question, then you can ask it with a quick semantic search.

Additionally, businesses benefit from semantic search by enhancing customer experiences, improving product discovery, and optimizing knowledge management systems.

For example, e-commerce stores like Bookshop.org can boost search-based sales by up to 43% by implementing semantic search tools like Meilisearch.

Embedding models are a core component of semantic search: they make similarity computations possible.

What are embedding models?

Embedding models are machine learning models that transform words, phrases, or documents into dense numerical representations called embeddings.

These vector representations encode word relationships, which allow search engines to compare semantic meaning and context rather than relying solely on exact matches between words.

For instance, embeddings generated for words like “phone,” “mobile,” and “cell” will be more closely aligned in vector space than, say, “cell” and “bell,” even though the latter two are closer in letter-by-letter similarity.

This allows a search engine to retrieve relevant results even if the exact keyword is absent, underpinning various NLP and LLM (large language model) applications.
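To make "closely aligned in vector space" concrete, here is a minimal sketch that compares vectors with cosine similarity, a common way to measure how close two embeddings are. The three-dimensional vectors are hand-written toy values chosen for illustration, not output from a real embedding model, which would typically produce hundreds or thousands of dimensions.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hand-written toy vectors, NOT produced by a real embedding model.
toy_embeddings = {
    "phone": [0.9, 0.1, 0.0],
    "mobile": [0.8, 0.2, 0.1],
    "cell": [0.7, 0.3, 0.1],
    "bell": [0.1, 0.2, 0.9],
}

for word in ("mobile", "cell", "bell"):
    score = cosine_similarity(toy_embeddings["phone"], toy_embeddings[word])
    print(f"phone vs {word}: {score:.3f}")
```

With these toy values, "phone" scores far higher against "mobile" and "cell" than against "bell", even though "cell" and "bell" differ by a single letter.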

What’s the difference between semantic and search embeddings?

Semantic and search embeddings serve distinct purposes in NLP applications, particularly in semantic search.

Semantic embeddings capture the meaning and relationships between words, which is helpful for tasks like document classification, recommendation systems, language translation, and sentiment analysis. They do this by reflecting the words’ conceptual similarities, even if they are not used in the same context.

On the other hand, search embeddings are specifically optimized for retrieval tasks, thus ensuring queries and indexed documents align effectively in vector space to maximize search relevance.

Unlike generic semantic embeddings, search embeddings often incorporate domain-specific optimizations that tune them for a particular retrieval task.

For example, a semantic embedding model might learn that "mac" is related to pasta, the makeup company, and the Apple laptop computer. However, a search embedding model trained on computers and hardware would prioritize the third meaning when processing search queries in that domain.

Embedding models serve as the backbone of semantic search by enabling similarity-based retrieval mechanisms that align with user intent.

What is the role of embedding models in semantic search?

Embedding models power semantic search by converting text into a structured format that can be efficiently indexed and retrieved.

Instead of relying on exact keyword matches, these models compare query embeddings with indexed document embeddings. This enables nearest-neighbor searches and significantly improves retrieval accuracy over traditional algorithms that depend on keyword matching.
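The comparison between a query embedding and indexed document embeddings can be sketched as an exhaustive nearest-neighbor scan. This is only an illustration under simplifying assumptions: the document ids and vectors are made up, and large indexes commonly use approximate nearest-neighbor structures rather than scoring every document.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def nearest_neighbors(query_vec, index, k=2):
    """Return the ids of the k documents most similar to query_vec (exhaustive scan)."""
    scored = [(cosine_similarity(query_vec, vec), doc_id) for doc_id, vec in index.items()]
    scored.sort(reverse=True)  # highest similarity first
    return [doc_id for _, doc_id in scored[:k]]


# Hypothetical index: document id -> toy embedding (not real model output).
index = {
    "doc_smartphone_review": [0.9, 0.1, 0.0],
    "doc_mobile_plans": [0.8, 0.3, 0.1],
    "doc_church_bells": [0.1, 0.1, 0.9],
}

query_vec = [0.85, 0.2, 0.05]  # pretend embedding of a phone-related query
top = nearest_neighbors(query_vec, index, k=2)
print(top)
```

Neither of the two phone-related documents needs to contain the query's exact words; they are returned because their vectors point in a similar direction.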

These models often use transformer-based architectures such as BERT (Bidirectional Encoder Representations from Transformers), GPT (Generative Pre-trained Transformer), and their derivative neural networks to capture context-aware representations.

That’s how they can handle nuanced queries at scale. For instance, even though “cat” and “bat” look and sound similar, a context-aware model can tell that only one of them is a conventional house pet.

Several fine-tuned and pre-trained models continue to emerge as industry standards for semantic search. Each improves precision, relevance, efficiency, and/or scalability in its own way.

What are the most commonly used models for semantic search?

Different embedding models vary in terms of vector dimensionality, context length, and certain other performance characteristics.

In the context of embedding models, dimensionality refers to the number of components in each vector, with each component capturing some learned feature of the encoded text. Context length is the maximum amount of text, usually measured in tokens, that a model can take into account in a single input.
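Dimensionality has a direct effect on storage. As a rough sketch, assuming one vector per document and 32-bit floats (4 bytes per component), the raw size of the vectors alone can be estimated as follows. Real indexes add overhead on top of this, and some engines or providers store vectors in compressed form, so treat the numbers as a lower-bound estimate.

```python
def embedding_storage_bytes(num_documents, dimensions, bytes_per_component=4):
    """Raw size of the vectors alone.

    Assumes one vector per document and 4-byte (float32) components;
    index structures and metadata are not included.
    """
    return num_documents * dimensions * bytes_per_component


for dims in (384, 1024, 1536, 3072):
    mib = embedding_storage_bytes(10_000, dims) / (1024 ** 2)
    print(f"{dims:>4} dimensions: {mib:.1f} MiB for 10,000 documents")
```

Under these assumptions, moving from 384 to 3072 dimensions multiplies vector storage by eight for the same number of documents.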

To assess these variations, a series of benchmark tests was conducted using Meilisearch, evaluating each model's effectiveness in realistic search scenarios.

These tests measured retrieval accuracy, indexing speed, and query latency to evaluate each model’s performance under specific search conditions.

| Model/Service | Dimensions | Context length (tokens) |
|---|---|---|
| Cohere embed-english-v3.0 | 1024 | 512 |
| Cohere embed-english-light-v3.0 | 384 | 512 |
| Cohere embed-multilingual-v3.0 | 1024 | 512 |
| Cohere embed-multilingual-light-v3.0 | 384 | 512 |
| OpenAI text-embedding-3-small | 1536 | 8192 |
| OpenAI text-embedding-3-large | 3072 | 8192 |
| Mistral | 1024 | 8192 |
| VoyageAI voyage-2 | 1024 | 4000 |
| VoyageAI voyage-large-2 | 1536 | 16000 |
| VoyageAI voyage-multilingual-2 | 1024 | 32000 |
| Jina Colbert v2 | 128, 96, or 64 | 8192 |
| OSS all-MiniLM-L6-v2 | 384 | 512 |
| OSS bge-small-en-v1.5 | 384 | 512 |
| OSS bge-large-en-v1.5 | 1024 | 512 |

Your choice of a model for semantic search will depend on a variety of factors, including, but not limited to, its accuracy, computational efficiency, and cost.

What factors should be considered when choosing the best model for semantic search?

1. Results relevancy

Relevance is the cornerstone of effective semantic search, especially in achieving optimal user experience. The right model should balance precision, recall, and speed to ensure users receive highly relevant results without excessive noise.

This balance becomes especially important when comparing hybrid approaches like vector and full-text search. When selecting an embedding model, think about the following:

- multilingual support;

- handling multi-modal data;

- domain-specific performance.

In this context, bigger might not always mean better. While larger models often provide better accuracy, smaller models can offer competitive results at lower computational costs.
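One way to check whether a smaller model is "competitive" on your own data is to compute precision and recall over the top k results for a set of queries with known relevant documents. The sketch below shows both metrics; the document ids and relevance judgments are invented for illustration.

```python
def precision_at_k(retrieved, relevant, k):
    """Fraction of the top-k retrieved documents that are relevant."""
    top_k = retrieved[:k]
    if not top_k:
        return 0.0
    hits = sum(1 for doc_id in top_k if doc_id in relevant)
    return hits / len(top_k)


def recall_at_k(retrieved, relevant, k):
    """Fraction of all relevant documents that appear in the top k."""
    if not relevant:
        return 0.0
    hits = sum(1 for doc_id in retrieved[:k] if doc_id in relevant)
    return hits / len(relevant)


# Hypothetical ranked results for one query, plus hand-labeled relevant documents.
retrieved = ["a", "b", "c", "d", "e"]
relevant = {"a", "c", "f"}

print(f"precision@3 = {precision_at_k(retrieved, relevant, 3):.2f}")
print(f"recall@3    = {recall_at_k(retrieved, relevant, 3):.2f}")
```

Averaging these values over a representative set of queries gives a simple basis for comparing two models on the same index.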

Moreover, effectively structuring data, such as with optimized document templates in Meilisearch, can improve search quality.
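The idea behind a document template is to control which fields are turned into the text that gets embedded. The sketch below illustrates that idea in plain Python with `string.Template`; it is not Meilisearch's own template syntax, and the field names (`category`, `title`, `description`) and the character limit are hypothetical.

```python
from string import Template

# Hypothetical template and field names, for illustration only.
PRODUCT_TEMPLATE = Template("A $category product named '$title'. $description")


def render_for_embedding(doc, max_chars=400):
    """Build the text that would be sent to the embedding model for one document."""
    text = PRODUCT_TEMPLATE.substitute(
        category=doc.get("category", "general"),
        title=doc["title"],
        description=doc.get("description", ""),
    )
    # Collapse repeated whitespace and cap the length so irrelevant bulk is not embedded.
    return " ".join(text.split())[:max_chars]


doc = {
    "id": 42,
    "title": "Chef knife",
    "category": "kitchen",
    "description": "Sharp 20 cm   steel blade.",
    "internal_sku": "X-991",  # deliberately left out of the embedded text
}
print(render_for_embedding(doc))
```

Leaving out fields such as internal identifiers keeps the embedded text focused on what users actually search for.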

2. Search performance

Search latency is a critical factor in user experience. Search-as-you-type has become the standard for customer-facing applications as fast, responsive search results improve user engagement and retention.

Local embedding models are ideal for achieving lightning-fast performance, as they eliminate round trips to external services. If you must rely on remote models, hosting your search service close to the embedding service can minimize delays and improve user experience.

The table below showcases latency benchmarks for various local embedding models and embedding APIs. All requests originate from a Meilisearch instance hosted in the AWS London data center.

| Model/Service | Latency (approx.) |
|---|---|
| Cloudflare bge-small-en-v1.5 | ~800ms |
| Cloudflare bge-large-en-v1.5 | ~500ms |
| Cohere embed-english-v3.0 | ~170ms |
| Cohere embed-english-light-v3.0 | ~160ms |
| Local gte-small | ~20ms |
| Local all-MiniLM-L6-v2 | ~10ms |
| Local bge-small-en-v1.5 | ~20ms |
| Local bge-large-en-v1.5 | ~60ms |
| Mistral | ~200ms |
| Jina colbert | ~400ms |
| OpenAI text-embedding-3-small | ~460ms |
| OpenAI text-embedding-3-large | ~750ms |
| VoyageAI voyage-2 | ~350ms |
| VoyageAI voyage-large-2 | ~400ms |

Benchmarks conducted in Meilisearch highlight the stark differences in latency across various models, with local models achieving response times as low as 10ms while some cloud-based services reach 800ms.
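Latency depends heavily on where your own services run, so it is worth measuring from your own infrastructure. The following is a minimal timing sketch, not the harness used for the benchmarks above. `fake_embed` is a stand-in that simply sleeps; in practice you would pass a function that calls your local model or your provider's client.

```python
import statistics
import time


def measure_latency_ms(embed, queries, warmup=1):
    """Time embed(query) for each query and report the median and max in milliseconds."""
    for query in queries[:warmup]:
        embed(query)  # warm-up calls are not recorded
    samples = []
    for query in queries:
        start = time.perf_counter()
        embed(query)
        samples.append((time.perf_counter() - start) * 1000)
    return {"median_ms": statistics.median(samples), "max_ms": max(samples)}


def fake_embed(text):
    """Stand-in embedder; replace with a real local model or API call."""
    time.sleep(0.002)  # simulate about 2 ms of work
    return [0.0] * 8


stats = measure_latency_ms(fake_embed, ["red shoes", "usb-c cable", "desk lamp"])
print(stats)
```

Reporting the median alongside the maximum helps separate typical latency from occasional slow requests.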

3. Indexing performance

Efficient indexing is another critical factor for the scalability of your search solution. As expected, the time required to process and store embeddings varies widely among models. Notable factors influencing processing time are API rate limits, batch processing capabilities, and model dimensions.

Local models without GPUs may experience slower indexing due to limited processing power, while third-party services vary in speed based on their infrastructure and agreements.

As mentioned earlier, minimizing data travel time between your application and the model can reduce latency and optimize indexing. Evaluating these factors ensures your chosen model and service can effectively meet your needs.
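Batching is one of the simplest levers here: sending many texts per request reduces the number of round trips and helps stay within rate limits. The sketch below is illustrative only. `embed_batch` is a hypothetical callable standing in for a provider client or local model, and the batch size of 96 is an arbitrary example, since limits differ between providers.

```python
def batched(items, batch_size):
    """Yield consecutive slices of items with at most batch_size elements each."""
    for start in range(0, len(items), batch_size):
        yield items[start:start + batch_size]


def embed_in_batches(texts, embed_batch, batch_size=96):
    """Embed all texts using one embed_batch call per batch."""
    vectors = []
    for batch in batched(texts, batch_size):
        vectors.extend(embed_batch(batch))
    return vectors


call_sizes = []


def fake_embed_batch(batch):
    """Stand-in for a real batch embedding call; records how many texts it received."""
    call_sizes.append(len(batch))
    return [[float(len(text))] for text in batch]


texts = [f"product {i}" for i in range(250)]
vectors = embed_in_batches(texts, fake_embed_batch, batch_size=96)
print(f"{len(vectors)} vectors from {len(call_sizes)} requests: {call_sizes}")
```

In this example, 250 documents are embedded in three requests instead of 250.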

The benchmark below compares the indexing performance of a collection of 10k e-commerce documents (with automatic embedding generation).

| Model/Service | Indexation time |
|---|---|
| Cohere embed-english-v3.0 | 43s |
| Cohere embed-english-light-v3.0 | 16s |
| OpenAI text-embedding-3-small | 95s |
| OpenAI text-embedding-3-large | 151s |
| Cloudflare bge-small-en-v1.5 | 152s |
| Cloudflare bge-large-en-v1.5 | 159s |
| Jina Colbert V2 | 375s |
| VoyageAI voyage-large-2 | 409s |
| Mistral | 409s |
| Local all-MiniLM-L6-v2 | 880s |
| Local bge-small-en-v1.5 | 3379s |
| Local bge-large-en-v1.5 | 9132s |

Meilisearch benchmarks indicate that indexing times range from under a minute for optimized cloud-based solutions to several hours for certain local models without GPU acceleration.

Weigh these figures against the frequency and volume of data updates in your application. Indexing time directly determines how quickly your system can absorb frequent or large updates, which is crucial for keeping your search solution fast and responsive.

4. Pricing

Embedding model costs vary depending on the provider and usage model. While local models are free to run, they require computational resources, potentially necessitating GPU investment.

On the other hand, cloud-based services charge per million tokens (or thousand neurons for Cloudflare), with costs ranging from $0.02 to $0.18 per million tokens.

| Provider | Price |
|---|---|
| Cohere | $0.10 per million tokens for Embed 3 |
| OpenAI | $0.02 per million tokens for text-embedding-3-small |
| OpenAI | $0.10 per million tokens for text-embedding-ada-002 |
| OpenAI | $0.13 per million tokens for text-embedding-3-large |
| Cloudflare | $0.011 per 1,000 Neurons |
| Jina | $0.18 per million tokens |
| Mistral | $0.10 per million tokens |
| VoyageAI | $0.02 per million tokens for voyage-3-lite |
| VoyageAI | $0.06 per million tokens for voyage-3 |
| VoyageAI | $0.12 per million tokens for voyage-multimodal-3 |
| VoyageAI | $0.18 per million tokens for voyage-code-3 |
| VoyageAI | $0.18 per million tokens for voyage-3-large |
| Local model | Free |

As such, analyze cost-effectiveness against search demand and performance requirements. It's often a good idea to start with a well-known model that's easy to set up and has robust community support. You can migrate to a cloud provider like AWS for improved performance when necessary.

Alternatively, you can choose an open-source model to self-host, giving you more flexibility. Just be aware that optimizing local models for high volume may require scaling your infrastructure.
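To weigh cost against demand, a back-of-the-envelope estimate is often enough. The sketch below multiplies a token count by a per-million-token price. The per-million prices are the ones listed in the table above, while the corpus size and the average of 200 tokens per document are assumptions you should replace with your own figures.

```python
def embedding_cost_usd(num_documents, avg_tokens_per_document, price_per_million_tokens):
    """Estimated cost of embedding a corpus once, in US dollars."""
    total_tokens = num_documents * avg_tokens_per_document
    return total_tokens / 1_000_000 * price_per_million_tokens


# Assumed workload: 10,000 documents averaging 200 tokens each.
NUM_DOCUMENTS = 10_000
AVG_TOKENS = 200

for label, price in [
    ("$0.02 per million tokens", 0.02),
    ("$0.10 per million tokens", 0.10),
    ("$0.13 per million tokens", 0.13),
    ("$0.18 per million tokens", 0.18),
]:
    cost = embedding_cost_usd(NUM_DOCUMENTS, AVG_TOKENS, price)
    print(f"{label}: ${cost:.2f} to embed the corpus once")
```

Keep in mind that this covers a single indexing pass. Re-indexing after updates and embedding every search query add to the total.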

5. Other optimization techniques

To maximize search performance, hybrid search approaches combining full-text and vector search can yield the best results. To refine semantic search performance, consider the following optimizations:

- Experiment with model presets, as some models allow tuning for query vs. document embedding, which can improve relevancy.

- Assess specialized models, especially for retrieval-augmented generation (RAG), as domain-specific models may offer superior results for particular use cases.

- Explore models that provide reranking functions that further enhance search precision.

- Test higher-tier accounts as premium tiers may provide faster processing and reduced rate limiting.

- Optimize data transfer with quantization options to reduce API response sizes and improve efficiency.
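The hybrid approach mentioned at the start of this section can be sketched as a weighted blend of a keyword score and a vector score per document. This is a generic illustration under the assumption that both scores are already normalized to the range 0 to 1. It does not describe how Meilisearch or any other engine merges results internally, and the parameter name `semantic_weight` is made up for this example.

```python
def hybrid_scores(keyword_scores, vector_scores, semantic_weight=0.5):
    """Blend two score maps (doc id -> score in [0, 1]) and rank the documents.

    semantic_weight=0.0 uses keyword scores only; 1.0 uses vector scores only.
    Documents missing from one map count as 0.0 for that side.
    """
    doc_ids = set(keyword_scores) | set(vector_scores)
    combined = {
        doc_id: (1 - semantic_weight) * keyword_scores.get(doc_id, 0.0)
        + semantic_weight * vector_scores.get(doc_id, 0.0)
        for doc_id in doc_ids
    }
    return sorted(combined.items(), key=lambda item: item[1], reverse=True)


# Made-up scores for one query.
keyword_scores = {"doc_a": 0.9, "doc_b": 0.2}
vector_scores = {"doc_b": 0.8, "doc_c": 0.7}

for weight in (0.0, 0.5, 1.0):
    ranked = hybrid_scores(keyword_scores, vector_scores, semantic_weight=weight)
    print(weight, [doc_id for doc_id, _ in ranked])
```

Trying a few weights against real queries is a quick way to see how much semantic matching helps for your content.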

Carefully evaluating these factors will help you select the best semantic search model to meet your needs.

The bottom line

Now that we’ve taken this journey together, let’s round up what we’ve discovered.

| Model/Service | Dimensions | Context length (tokens) | Latency (approx.) | Indexation time | Pricing (per million tokens) |
|---|---|---|---|---|---|
| Cohere embed-english-v3.0 | 1024 | 512 | ~170ms | 43s | $0.10 |
| Cohere embed-english-light-v3.0 | 384 | 512 | ~160ms | 16s | $0.10 |
| OpenAI text-embedding-3-small | 1536 | 8192 | ~460ms | 95s | $0.02 |
| OpenAI text-embedding-3-large | 3072 | 8192 | ~750ms | 151s | $0.13 |
| Mistral | 1024 | 8192 | ~200ms | 409s | $0.10 |
| VoyageAI voyage-2 | 1024 | 4000 | ~350ms | 330s | $0.10 |
| VoyageAI voyage-large-2 | 1536 | 16000 | ~400ms | 409s | $0.12 |
| Jina Colbert v2 | 128, 96, or 64 | 8192 | ~400ms | 375s | $0.18 |
| OSS all-MiniLM-L6-v2 | 384 | 512 | ~10ms | 880s | Free |
| OSS bge-small-en-v1.5 | 384 | 512 | ~20ms | 3379s | Free |
| OSS bge-large-en-v1.5 | 1024 | 512 | ~60ms | 9132s | Free |

Choosing the best model for semantic search depends on your specific use case, budget, and performance requirements. For most cases, cloud-based solutions such as those provided by Cohere or OpenAI may be optimal.

As the needs of your organization grow, it might be worth the cost and effort to upgrade to a local or self-hosted solution. Understanding your own needs is paramount to making a well-informed decision.

If you’re unsure which model is best for you or if you’re looking for a tailored solution, contact a search expert at Meilisearch.

Frequently asked questions (FAQs)

How does semantic search differ from keyword-based search?

Semantic search focuses on understanding meaning, while keyword-based search relies on exact word matches.

What are the key features of a good semantic search model?

A good model should offer high accuracy, low latency, efficient indexing, and cost-effectiveness.

How do transformer-based models improve semantic search?

Transformers process text in context, capturing relationships between words across a whole passage to enhance search relevance.

How do vector databases enhance semantic search?

Vector databases store and index embeddings efficiently, enabling fast and scalable similarity search.

What are some open-source models for semantic search?

Popular open-source semantic search models include all-MiniLM-L6-v2 and Universal Sentence Encoder.